Exploratory Data Analysis: Techniques for Data Understanding

What is Exploratory Data Analysis (EDA)?

Exploratory Data Analysis, or EDA, is a fundamental step in the data analysis process. It involves examining datasets to summarize their main characteristics, often using visual methods. The goal is to uncover patterns, spot anomalies, and test hypotheses before diving into more complex analysis.

Think of EDA as the initial investigation phase, akin to a detective gathering clues before solving a mystery. By engaging with the data visually and statistically, analysts can gain insights that inform subsequent steps in analysis. This stage is crucial for ensuring that the data is understood in context.

With EDA, you can ask specific questions about your data and explore relationships between variables. It sets the stage for deeper analyses, making it an indispensable tool in any data scientist's toolkit.

Key Techniques in EDA: Descriptive Statistics

Descriptive statistics provide a summary of the data’s main features through numerical calculations. Common measures include mean, median, mode, and standard deviation, which help to describe the central tendency and spread of the data. These metrics offer a foundational understanding of what your data looks like at a glance.

For instance, if you're analyzing test scores, knowing the average score (mean) alongside the highest (max) and lowest (min) scores can reveal a lot about student performance. Descriptive statistics serve as a starting point, guiding further exploration and analysis. They can highlight trends and outliers that might be worth investigating more deeply.
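As a minimal sketch of the test-score example, the summary measures above can be computed with Python's standard `statistics` module. The `scores` list is made up for illustration; with real data you would load the values from your own source.

```python
import statistics

# Hypothetical test scores, invented for this example.
scores = [55, 62, 70, 70, 74, 78, 81, 85, 90, 95]

summary = {
    "count": len(scores),
    "mean": statistics.mean(scores),      # central tendency
    "median": statistics.median(scores),  # middle value, robust to extremes
    "mode": statistics.mode(scores),      # most frequent value
    "stdev": statistics.stdev(scores),    # sample standard deviation (n - 1)
    "min": min(scores),
    "max": max(scores),
}

for name, value in summary.items():
    print(f"{name:>6}: {value:.2f}")
```

Comparing the mean with the median is a quick first check: a large gap between them hints at skew or extreme values worth a closer look.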

However, while descriptive statistics provide valuable insights, they don't tell the whole story. It's important to complement these numbers with visual methods to capture the essence of the data.

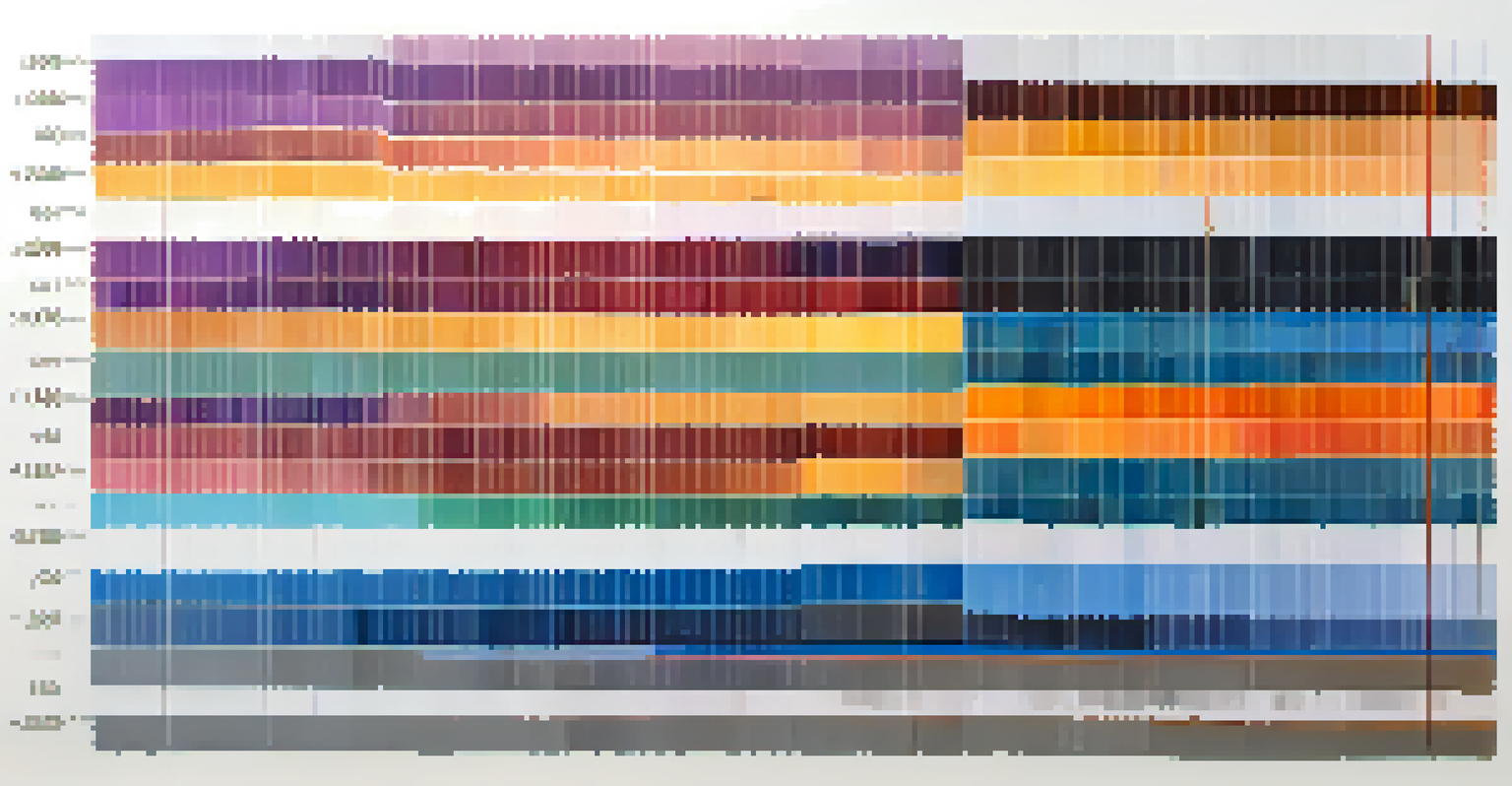

Visualizing Data: The Power of Graphs and Charts

Visualizations are a powerful component of EDA, transforming complex datasets into understandable graphics. Charts, such as bar graphs, histograms, and scatter plots, allow analysts to quickly see patterns and relationships in the data. This visual approach often reveals insights that numbers alone might miss.

For example, a scatter plot can show the correlation between two variables, helping you identify trends that might not be obvious from descriptive statistics. Visual tools can also highlight outliers—data points that deviate significantly from the norm—prompting further investigation. By engaging with the data visually, you foster a more intuitive understanding.

Incorporating visualizations into your analysis not only aids in understanding but also in communicating findings effectively to stakeholders. It’s about making the data story come alive.

Identifying Outliers: Why They Matter

Outliers are data points that stand apart from the rest of the dataset, and identifying them is a crucial part of EDA. These anomalies can indicate errors in data collection or signal important insights worth exploring. For instance, a sudden spike in sales data might highlight an unexpected marketing success or an error in data entry.

By visualizing data using box plots or scatter plots, you can easily spot these outliers. Once identified, it’s important to investigate their cause: Are they valid data points that reveal valuable information, or do they represent noise that should be discarded? Understanding outliers can significantly impact your overall analysis and conclusions.
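A box plot usually flags points that fall more than 1.5 times the interquartile range (IQR) beyond the quartiles. The sketch below applies that same rule numerically. The function name `iqr_outliers`, the multiplier default, and the sales figures are illustrative assumptions, not a prescribed method.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return values outside the fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical daily sales figures with one suspicious spike.
daily_sales = [120, 125, 130, 128, 122, 127, 131, 126, 124, 480]

flagged = iqr_outliers(daily_sales)
print(flagged)  # [480]
```

Flagging is only the first step. Whether 480 is a data-entry error or a genuine sales spike still has to be settled by checking the source.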

Ignoring outliers can lead to misleading interpretations, because a few extreme values can pull the mean and inflate the standard deviation. Treat them as opportunities for deeper inquiry rather than as a nuisance.

Assessing Data Distribution: Histograms and Density Plots

Understanding the distribution of your data is essential in EDA, and histograms are a great tool for this. A histogram displays the frequency of data points across ranges, giving you a snapshot of how the data is spread. This visualization helps identify common patterns, such as normal distribution, skewness, or bimodality.
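To make the idea of "frequency across ranges" concrete, here is a small sketch that counts values into equal-width bins and prints a text histogram. The `histogram` helper, the bin settings, and the response-time data are all hypothetical. In practice a plotting library would draw the bars for you.

```python
def histogram(values, start, bin_width, n_bins):
    """Count values in n_bins equal-width bins [edge, edge + bin_width)."""
    counts = [0] * n_bins
    for v in values:
        index = int((v - start) // bin_width)
        if 0 <= index < n_bins:  # values outside the range are ignored
            counts[index] += 1
    return counts

# Hypothetical response times in seconds, right-skewed on purpose.
times = [1, 1, 2, 2, 2, 3, 3, 4, 5, 7, 9, 14]

counts = histogram(times, start=0, bin_width=3, n_bins=5)
for i, count in enumerate(counts):
    low, high = i * 3, i * 3 + 3
    print(f"[{low:>2}, {high:>2}) | {'#' * count}")
```

Most values pile up in the first two bins while a thin tail stretches to the right, which is what right skew looks like. Changing `bin_width` changes the picture, so it is worth trying more than one setting.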

Density plots offer a smoother alternative to histograms, showing the underlying distribution without the binning artifacts of a histogram, although their shape depends on the chosen smoothing bandwidth. These plots can help visualize where values are concentrated and how likely data points are to fall within certain ranges. They are particularly useful when comparing distributions across multiple groups.
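Behind a density plot there is typically a kernel density estimate: each data point contributes a small bump, and the bumps are summed into a smooth curve. The sketch below uses a Gaussian kernel with a hand-picked bandwidth. The helper name `gaussian_kde`, the bandwidth of 0.5, and the sample are assumptions for illustration only.

```python
import math

def gaussian_kde(values, bandwidth):
    """Return a function that estimates density using Gaussian kernels."""
    n = len(values)
    norm = 1.0 / (n * bandwidth * math.sqrt(2 * math.pi))

    def density(x):
        return norm * sum(
            math.exp(-0.5 * ((x - v) / bandwidth) ** 2) for v in values
        )

    return density

# Hypothetical sample with two clusters (a bimodal shape).
sample = [1.0, 1.2, 0.8, 1.1, 5.0, 5.2, 4.9, 5.1]

density = gaussian_kde(sample, bandwidth=0.5)
for x in (1.0, 3.0, 5.0):
    print(f"density({x}) = {density(x):.3f}")
```

The estimate is high near 1 and 5 and low in between, revealing the two clusters. A much larger bandwidth would blur them into a single hump, so the bandwidth plays the same role here that bin width plays for a histogram.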

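By assessing data distribution, you can make informed decisions about which statistical tests to apply later, for example whether a method that assumes normality is appropriate or a transformation is needed first. It’s all about understanding the landscape of your data.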

Correlation Analysis: Exploring Relationships Between Variables

Correlation analysis evaluates the strength and direction of the relationship between two variables. It is often visualized with scatter plots, which show how one variable changes in relation to another. A strong correlation might suggest a predictive relationship, while a weak correlation indicates little linear association, though a nonlinear relationship may still be present.

For instance, if you're examining the relationship between hours studied and exam scores, a positive correlation would indicate that students who study more tend to score higher. This insight can point educators toward study practices worth testing. However, it’s essential to remember that correlation does not imply causation: two variables can move together because of a third factor, such as prior preparation, without one causing the other.
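The most common correlation measure is Pearson's r, which ranges from -1 to 1 and captures linear association only. The sketch below computes it from scratch for a made-up hours-versus-scores dataset, then for a deliberately nonlinear case. The function name `pearson_r` and all numbers are illustrative.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical study data: hours studied and exam score per student.
hours = [1, 2, 3, 4, 5, 6]
scores = [52, 58, 65, 70, 74, 83]
r = pearson_r(hours, scores)
print(f"hours vs. scores: r = {r:.3f}")

# A perfectly predictable but nonlinear relationship (y = x squared).
xs = [-2, -1, 0, 1, 2]
ys = [x ** 2 for x in xs]
r_curve = pearson_r(xs, ys)
print(f"x vs. x squared:  r = {r_curve:.3f}")
```

The first pair gives an r close to 1, while the second gives an r of 0 even though `ys` is fully determined by `xs`. That is a useful reminder to look at the scatter plot and not only the coefficient.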

Exploring correlations is vital for understanding complex datasets, allowing analysts to uncover meaningful relationships that guide decision-making.

Feature Engineering: Enhancing Data for Better Insights

Feature engineering involves creating new input features from existing data to improve model performance and insights. This process can include transforming raw data, generating interaction terms, or encoding categorical variables. By enhancing the dataset, you can uncover patterns that might not be immediately visible.
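Two of the techniques just mentioned, encoding a categorical variable and generating an interaction term, can be sketched in a few lines. The order records, the `one_hot` helper, and the `order_value` feature are hypothetical examples, not part of any particular dataset or library.

```python
def one_hot(values):
    """Encode category labels as 0/1 indicator columns, one per category."""
    categories = sorted(set(values))
    return [{f"is_{c}": int(v == c) for c in categories} for v in values]

# Hypothetical order records.
orders = [
    {"channel": "web", "price": 20.0, "quantity": 3},
    {"channel": "store", "price": 5.0, "quantity": 10},
    {"channel": "web", "price": 12.5, "quantity": 2},
]

encoded = one_hot([order["channel"] for order in orders])

# Merge the indicator columns and add an interaction term (price x quantity).
features = [
    {**order, **flags, "order_value": order["price"] * order["quantity"]}
    for order, flags in zip(orders, encoded)
]

for row in features:
    print(row)
```

The indicator columns let numeric methods work with the `channel` category, and `order_value` captures an effect that neither `price` nor `quantity` shows on its own.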

For example, if you're analyzing customer purchase behavior, you might create a 'recency' feature that measures how recently a customer made a purchase. This new feature can provide valuable insights into customer loyalty and behavior. Feature engineering requires creativity and domain knowledge, as the right features can significantly enhance model accuracy.
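One way to sketch the recency feature is to take each customer's latest purchase date and count the days up to a reference date. The customer names, dates, and the `recency_days` helper below are invented for illustration, and the choice of reference date is an assumption you would adapt to your own analysis.

```python
from datetime import date

def recency_days(purchase_dates, as_of):
    """Days since each customer's most recent purchase, as of a given date."""
    return {
        customer: (as_of - max(dates)).days
        for customer, dates in purchase_dates.items()
    }

# Hypothetical purchase history per customer.
purchases = {
    "alice": [date(2024, 1, 5), date(2024, 3, 20)],
    "bob": [date(2023, 11, 2)],
}

recency = recency_days(purchases, as_of=date(2024, 4, 1))
print(recency)  # {'alice': 12, 'bob': 151}
```

A small value means a recent purchase. Fixing `as_of` explicitly, instead of using today's date, keeps the feature reproducible when the analysis is rerun later.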

Ultimately, investing time in feature engineering can pay off, providing richer insights and more accurate predictions in your analysis.